Assignment 3 - FAQ


Latest revision as of 16:00, 15 April 2014

Frequently Asked Questions

Computing relative frequencies

To compute relative frequencies, you have to aggregate co-occurrence counts *across* all documents. Here's an example (one document per line):

    The lazy dog the
    I like the dog
    I'm rob the
    He dog the

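First, compute the co-occurrence counts for each term, summed over all documents (two terms co-occur when they appear in the same document). Each line below shows a term followed by its neighbor:count entries: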

    lazy the:1 The:1 dog:1
    like I:1 the:1 dog:1
    I the:1 like:1 dog:1
    rob I'm:1 the:1
    dog lazy:1 like:1 I:1 the:3 The:1 He:1
    I'm rob:1 the:1
    the lazy:1 like:1 I:1 rob:1 dog:3 The:1 I'm:1 He:1
    The the:1 lazy:1 dog:1
    He the:1 dog:1

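Once you have these, computing relative frequencies is easy: f(B|A) = count(A, B) / (sum of count(A, X) over all terms X). For example:

- f(He|dog) = 1/8, because (dog, He) co-occur only once, and dog appears in 1 + 1 + 1 + 3 + 1 + 1 = 8 pairs.

- f(the|lazy) = 1/3, because (lazy, the) co-occur once, and lazy appears in 1 + 1 + 1 = 3 pairs. Here I am assuming that "the" and "The" are different terms.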
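The counting above can be checked with a short Python 3 sketch. It assumes, as the listing does, that two terms co-occur once per document in which both appear (repeats inside a document are not counted twice), that terms are split on whitespace, and that case matters. The function names are illustrative only, not part of the assignment.

```python
from collections import Counter
from fractions import Fraction


def cooccurrences(documents):
    """Count co-occurring (term, neighbor) pairs, summed across documents.

    Assumption: within one document each pair of distinct terms counts once,
    even if a term repeats; terms are case-sensitive ("the" != "The").
    """
    pairs = Counter()
    for doc in documents:
        terms = set(doc.split())
        for term in terms:
            for neighbor in terms:
                if neighbor != term:
                    pairs[(term, neighbor)] += 1
    return pairs


def relative_frequency(pairs, a, b):
    """f(b | a) = count(a, b) / sum of count(a, x) over every neighbor x."""
    marginal = sum(c for (term, _), c in pairs.items() if term == a)
    return Fraction(pairs[(a, b)], marginal)


docs = ["The lazy dog the", "I like the dog", "I'm rob the", "He dog the"]
pairs = cooccurrences(docs)
print(relative_frequency(pairs, "dog", "He"))    # 1/8
print(relative_frequency(pairs, "lazy", "the"))  # 1/3
```

Using Fraction keeps the results exact, so they can be compared directly with the hand-computed 1/8 and 1/3.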

Associative Collections in Python

For the stripes implementation, Python's dictionary (an associative collection) can be helpful: you can store each stripe as a dictionary entry.

A dictionary is like a list, but while list indices must be integers, dictionary keys can also be strings or tuples (any hashable value). Here's an example:

    m = {}              # Initialize an empty map
    m['jj'] = {}        # Initialize an empty map (the stripe) for term "jj"
    m['jj']['ii'] = 1   # Associate ('ii', 1) with "jj"
    m['jj']['ff'] = 1   # Associate ('ff', 1) with "jj"
    m['jj']['ii'] += 1  # Increment the count stored under key 'ii' by 1
    print(m)            # Python 3.7+ outputs: {'jj': {'ii': 2, 'ff': 1}}
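Building on the dictionary example, here is a minimal sketch of a stripes mapper and reducer written as plain Python 3 functions over lines of text. Serializing each stripe as JSON so it can travel through Hadoop Streaming's line-based interface is an assumption (any reversible encoding would do), and the names stripes_mapper and stripes_reducer are hypothetical, not the course's scripts.

```python
import json
from itertools import groupby


def stripes_mapper(lines):
    """For each document (one per line), emit 'term<TAB>stripe' per distinct term.

    The stripe is a dictionary {neighbor: count}, serialized as JSON (assumed
    encoding) so it fits Hadoop Streaming's text interface.
    """
    for line in lines:
        terms = set(line.split())
        for term in terms:
            stripe = {}
            for neighbor in terms:
                if neighbor != term:
                    stripe[neighbor] = stripe.get(neighbor, 0) + 1
            yield "%s\t%s" % (term, json.dumps(stripe, sort_keys=True))


def stripes_reducer(sorted_lines):
    """Merge all stripes of a term element-wise, then emit f(neighbor | term).

    Expects its input sorted by term, as Hadoop's shuffle (or `sort`) delivers it.
    """
    parsed = (line.rstrip("\n").split("\t", 1) for line in sorted_lines)
    for term, group in groupby(parsed, key=lambda fields: fields[0]):
        merged = {}
        for _, encoded in group:
            for neighbor, count in json.loads(encoded).items():
                merged[neighbor] = merged.get(neighbor, 0) + count
        marginal = float(sum(merged.values()))  # count(term, *)
        freqs = dict((n, c / marginal) for n, c in merged.items())
        yield "%s\t%s" % (term, json.dumps(freqs, sort_keys=True))


docs = ["The lazy dog the", "I like the dog", "I'm rob the", "He dog the"]
for out_line in stripes_reducer(sorted(stripes_mapper(docs))):
    print(out_line)
```

To turn these into streaming scripts, the mapper script could print each line yielded by stripes_mapper(sys.stdin), and the reducer script each line yielded by stripes_reducer(sys.stdin).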

Hadoop Streaming

- For an overview, see https://hadoop.apache.org/docs/r0.19.1/streaming.html

How do I specify which subset of a key is used by the partitioner?

- Hadoop Streaming lets you change the partitioning strategy, so that only some of the key fields decide which reducer receives each record.

- Here's an example:

hadoop jar /usr/lib/hadoop/contrib/streaming/hadoop-streaming-1.0.3.16.jar -D mapred.reduce.tasks=2 -D stream.num.map.output.key.fields=2 -D num.key.fields.for.partition=2 -file wordMatrix_mapperPairs.py -mapper wordMatrix_mapperPairs.py -file wordMatrix_reducerPairs.py -reducer wordMatrix_reducerPairs.py -partitioner org.apache.hadoop.mapred.lib.KeyFieldBasedPartitioner -input /user/juliana/input -output /user/juliana/output2

- stream.num.map.output.key.fields=2 tells Hadoop that the first two tab-separated fields of each mapper output line form the key, in this case (word1, word2), and that the third field is the value.

- num.key.fields.for.partition=2 specifies that both key fields are used by the partitioner.

- Note that we also need to specify the partitioner: -partitioner org.apache.hadoop.mapred.lib.KeyFieldBasedPartitioner. Without it, the default partitioner hashes the entire key, regardless of num.key.fields.for.partition.

- Here's another example, which still uses (word1, word2) as the key but uses only the first field to choose the reducer:

hadoop jar /usr/lib/hadoop/contrib/streaming/hadoop-streaming-1.0.3.16.jar -D mapred.reduce.tasks=2 -D stream.num.map.output.key.fields=2 -D num.key.fields.for.partition=1 -file wordMatrix_mapperPairs.py -mapper wordMatrix_mapperPairs.py -file wordMatrix_reducerPairs.py -reducer wordMatrix_reducerPairs.py -partitioner org.apache.hadoop.mapred.lib.KeyFieldBasedPartitioner -input /user/juliana/input -output /user/juliana/output2

- num.key.fields.for.partition=1 specifies that only the first field (word1) is used by the partitioner, so all pairs with the same word1 are sent to the same reducer.
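To see why partitioning on the first field matters for the pairs approach, here is a Python 3 sketch that simulates two reducers. pairs_mapper emits word1<TAB>word2<TAB>1 lines, matching stream.num.map.output.key.fields=2. toy_partition is a stand-in that only mimics the idea behind KeyFieldBasedPartitioner (it does not reproduce Hadoop's hash function), and all names here are hypothetical, not the contents of wordMatrix_mapperPairs.py or wordMatrix_reducerPairs.py.

```python
import zlib
from itertools import groupby


def pairs_mapper(lines):
    """Emit 'word1<TAB>word2<TAB>1' for every co-occurring pair in a document."""
    for line in lines:
        terms = set(line.split())
        for word1 in terms:
            for word2 in terms:
                if word1 != word2:
                    yield "%s\t%s\t1" % (word1, word2)


def toy_partition(line, num_key_fields, num_reducers):
    """Illustration only: choose a reducer from the first num_key_fields fields.

    Mimics the idea of KeyFieldBasedPartitioner, not its actual hash function.
    """
    prefix = "\t".join(line.split("\t")[:num_key_fields])
    return zlib.crc32(prefix.encode("utf-8")) % num_reducers


def pairs_reducer(sorted_lines):
    """Emit 'word1<TAB>word2<TAB>f(word2 | word1)', one word1 group at a time.

    Only correct if every line for a given word1 reaches this reducer (what
    num.key.fields.for.partition=1 ensures) and lines arrive sorted by key.
    """
    parsed = (line.rstrip("\n").split("\t") for line in sorted_lines)
    for word1, group in groupby(parsed, key=lambda fields: fields[0]):
        counts = {}
        for _, word2, count in group:
            counts[word2] = counts.get(word2, 0) + int(count)
        marginal = float(sum(counts.values()))  # count(word1, *)
        for word2 in sorted(counts):
            yield "%s\t%s\t%r" % (word1, word2, counts[word2] / marginal)


# Simulate mapred.reduce.tasks=2, partitioning on the first key field only.
docs = ["The lazy dog the", "I like the dog", "I'm rob the", "He dog the"]
reducer_inputs = {0: [], 1: []}
for pair_line in pairs_mapper(docs):
    reducer_inputs[toy_partition(pair_line, 1, 2)].append(pair_line)
for reducer_id in sorted(reducer_inputs):
    for out_line in pairs_reducer(sorted(reducer_inputs[reducer_id])):
        print("%d\t%s" % (reducer_id, out_line))
```

Because every pair for a given word1 lands on one reducer, and the keys arrive sorted, the reducer can compute the marginal for word1 by buffering only that word's counts. If the partitioner used both fields, the pairs for one word1 could be spread over several reducers, and no single reducer would see the whole marginal.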

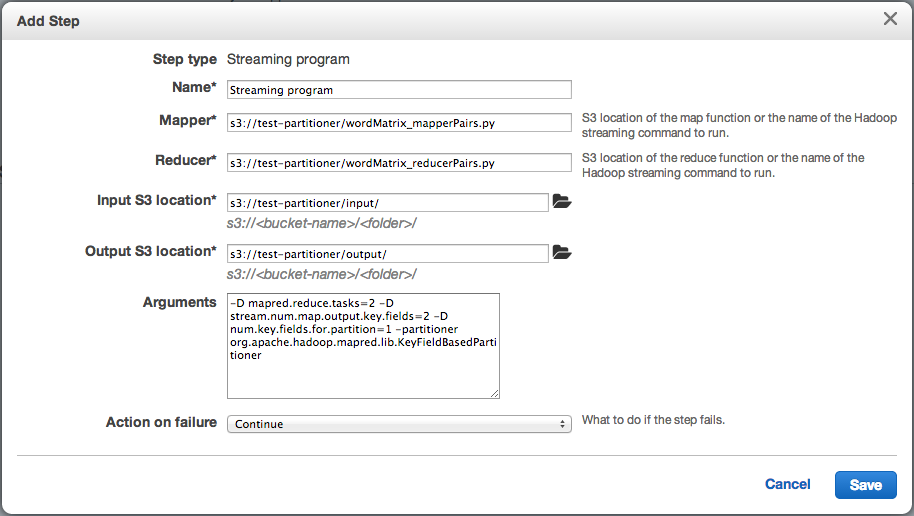

How do I specify which subset of a key to be used by the partitioner on AWS?

- You can do this when you configure a step, by adding the appropriate directives and parameters in the Arguments box:

How do I run a MapReduce job on a single-node installation?

- I have created an example and detailed instructions on how to run a MapReduce job on a single-node installation.

The instructions are at: http://vgc.poly.edu/~juliana/courses/BigData2014/Lectures/MapReduceExample/readme.txt

- And you can download all the necessary files from

http://vgc.poly.edu/~juliana/courses/BigData2014/Lectures/MapReduceExample/mr-example.tgz

How do I run a MapReduce job on the NYU Hadoop Cluster?

- I have created instructions for running the MapReduce example you worked with in the last lab session on the NYU cluster. You can find them at: http://vgc.poly.edu/~juliana/courses/BigData2014/Lectures/MapReduceExample/readme-nyu-hadoop.txt

- Note: the first version of this file had an extraneous space in alias hjs='/usr/bin/hadoop jar $HAS/$HSJ'. There must be no spaces around "=" in the alias definition.

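How do I test MapReduce code on my local machine?

- You can use Unix pipes, with sort standing in for Hadoop's shuffle-and-sort phase between the mapper and the reducer, e.g., cat "samplefilename" | ./mapper.py | sort | ./reducer.py (make the scripts executable first with chmod +x mapper.py reducer.py and give them a #!/usr/bin/env python first line, or run them as python mapper.py and python reducer.py).

See details at http://www.michael-noll.com/tutorials/writing-an-hadoop-mapreduce-program-in-python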
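The reason for the sort step can be shown in-process. This Python 3 sketch uses a hypothetical word-count mapper and reducer; run_locally feeds the mapper output to the reducer either sorted (as sort, or Hadoop's shuffle, would deliver it) or unsorted.

```python
from itertools import groupby


def wordcount_mapper(lines):
    """Hypothetical mapper: emit 'word<TAB>1' for every word."""
    for line in lines:
        for word in line.split():
            yield "%s\t1" % word


def wordcount_reducer(lines):
    """Hypothetical reducer: sum the counts of consecutive lines with the same word."""
    parsed = (line.rstrip("\n").split("\t") for line in lines)
    for word, group in groupby(parsed, key=lambda fields: fields[0]):
        yield "%s\t%d" % (word, sum(int(count) for _, count in group))


def run_locally(mapper, reducer, lines, sort=True):
    """In-process stand-in for: cat input | mapper | sort | reducer."""
    mapped = list(mapper(lines))
    if sort:
        mapped.sort()  # plays the role of `sort` / Hadoop's shuffle
    return list(reducer(mapped))


docs = ["The lazy dog the", "I like the dog", "I'm rob the", "He dog the"]
print(run_locally(wordcount_mapper, wordcount_reducer, docs))
print(run_locally(wordcount_mapper, wordcount_reducer, docs, sort=False))
```

With sorting, "the" is reported once with a count of 4; without it, a reducer that groups consecutive lines emits several partial counts for the same word, which is exactly what happens if you leave sort out of the shell pipeline.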

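When I try to run the actual MapReduce job on the NYU cluster, I get an error: Exception in thread "main" java.io.IOException: Error opening job jar: /

- The error "Error opening job jar: /" indicates that Hadoop received "/" (the root directory) as the jar path. A likely cause is that $HAS and $HSJ are not set in your current shell: because the alias hjs is defined in single quotes, $HAS/$HSJ is expanded only when you run hjs, and if both variables are empty it becomes just "/".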

- Try issuing the *full* command without using the alias:

$ /usr/bin/hadoop jar /usr/lib/hadoop/contrib/streaming/hadoop-streaming-1.0.3.16.jar -file pmap.py -mapper pmap.py -file pred.py -reducer pred.py -input /user/juliana/wikipedia.txt -output /user/juliana/wikipedia.output