Difference between revisions of "Assignment 3 - FAQ"

| Line 8: | Line 8: | ||

hadoop jar /usr/bin/hadoop/contrib/streaming/hadoop-streaming-1.0.3.16.jar -D mapred.reduce.tasks=2 -D stream.num.map.output.key.fields=2 -D num.key.fields.for.partition=2 -file wordMatrix_mapperPairs.py -mapper wordMatrix_mapperPairs.py -file wordMatrix_reducerPairs.py -reducer wordMatrix_reducerPairs.py -partitioner org.apache.hadoop.mapred.lib.KeyFieldBasedPartitioner -input /user/juliana/input -output /user/juliana/output2 | hadoop jar /usr/bin/hadoop/contrib/streaming/hadoop-streaming-1.0.3.16.jar -D mapred.reduce.tasks=2 -D stream.num.map.output.key.fields=2 -D num.key.fields.for.partition=2 -file wordMatrix_mapperPairs.py -mapper wordMatrix_mapperPairs.py -file wordMatrix_reducerPairs.py -reducer wordMatrix_reducerPairs.py -partitioner org.apache.hadoop.mapred.lib.KeyFieldBasedPartitioner -input /user/juliana/input -output /user/juliana/output2 | ||

</code> | </code> | ||

** '''stream.num.map.output.key.fields=2''' informs Hadoop that the first 2 fields of the mapper output form the key -- in this case (word1,word2), and the third field corresponds to the value. | ** '''stream.num.map.output.key.fields=2''' informs Hadoop that the first 2 fields of the mapper output form the key -- in this case (word1,word2), and the third field corresponds to the value. | ||

** '''num.key.fields.for.partition=2''' specifies that both fields are to be used by the partitioner. | ** '''num.key.fields.for.partition=2''' specifies that both fields are to be used by the partitioner. | ||

| Line 17: | Line 16: | ||

hadoop jar /usr/bin/hadoop/contrib/streaming/hadoop-streaming-1.0.3.16.jar -D mapred.reduce.tasks=2 -D stream.num.map.output.key.fields=2 -D num.key.fields.for.partition=1 -file wordMatrix_mapperPairs.py -mapper wordMatrix_mapperPairs.py -file wordMatrix_reducerPairs.py -reducer wordMatrix_reducerPairs.py -partitioner org.apache.hadoop.mapred.lib.KeyFieldBasedPartitioner -input /user/juliana/input -output /user/juliana/output2 | hadoop jar /usr/bin/hadoop/contrib/streaming/hadoop-streaming-1.0.3.16.jar -D mapred.reduce.tasks=2 -D stream.num.map.output.key.fields=2 -D num.key.fields.for.partition=1 -file wordMatrix_mapperPairs.py -mapper wordMatrix_mapperPairs.py -file wordMatrix_reducerPairs.py -reducer wordMatrix_reducerPairs.py -partitioner org.apache.hadoop.mapred.lib.KeyFieldBasedPartitioner -input /user/juliana/input -output /user/juliana/output2 | ||

</code> | </code> | ||

** '''num.key.fields.for.partition=1''' specifies that both fields are to be used by the partitioner. | ** '''num.key.fields.for.partition=1''' specifies that both fields are to be used by the partitioner. | ||

| Line 24: | Line 22: | ||

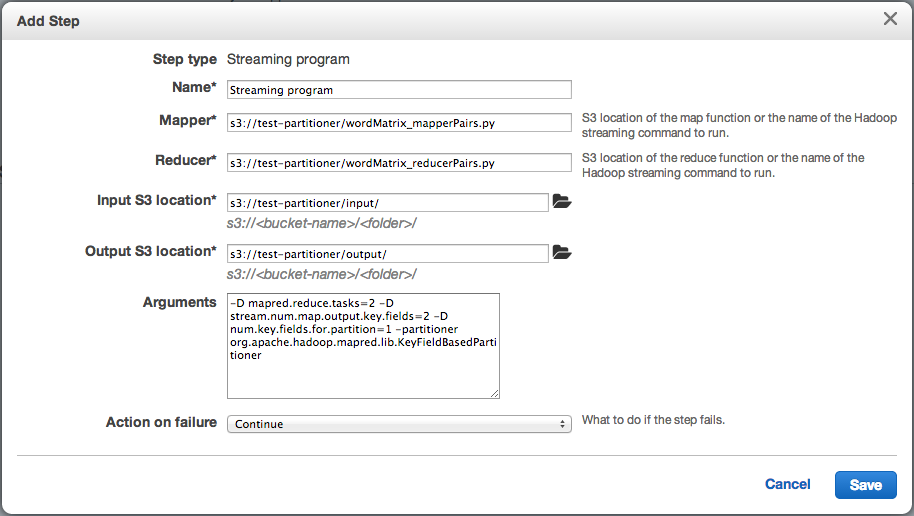

* You can do this when you configure a step, by adding the appropriate directives and parameters in the Arguments box: | * You can do this when you configure a step, by adding the appropriate directives and parameters in the Arguments box: | ||

[[File:emr-partitioner.png]] | [[File:emr-partitioner.png]] | ||

=== How do I run a mapreduce job on a single-node installation? === | |||

*I have created an example and detailed instructions on how to run a mapreduce job on a single-node installation. | |||

The instructions are in: http://vgc.poly.edu/~juliana/courses/BigData2014/Lectures/MapReduceExample/readme.txt | |||

* And you can download all the necessary files from | |||

http://vgc.poly.edu/~juliana/courses/BigData2014/Lectures/MapReduceExample/mr-example.tgz | |||

Revision as of 04:12, 11 April 2014

Frequently Asked Questions

How do I specify which subset of a key is used by the partitioner?

- Hadoop Streaming provides an option for you to modify the partitioning strategy.

- Here's an example:

hadoop jar /usr/bin/hadoop/contrib/streaming/hadoop-streaming-1.0.3.16.jar \
    -D mapred.reduce.tasks=2 \
    -D stream.num.map.output.key.fields=2 \
    -D num.key.fields.for.partition=2 \
    -file wordMatrix_mapperPairs.py -mapper wordMatrix_mapperPairs.py \
    -file wordMatrix_reducerPairs.py -reducer wordMatrix_reducerPairs.py \
    -partitioner org.apache.hadoop.mapred.lib.KeyFieldBasedPartitioner \
    -input /user/juliana/input \
    -output /user/juliana/output2

- stream.num.map.output.key.fields=2 informs Hadoop that the first 2 fields of the mapper output form the key -- in this case (word1,word2), and the third field corresponds to the value.

- num.key.fields.for.partition=2 specifies that both fields are to be used by the partitioner.

- Note that we also need to specify the partitioner: -partitioner org.apache.hadoop.mapred.lib.KeyFieldBasedPartitioner

- Here's another example, now using only the first field of the key for partitioning:

hadoop jar /usr/bin/hadoop/contrib/streaming/hadoop-streaming-1.0.3.16.jar \
    -D mapred.reduce.tasks=2 \
    -D stream.num.map.output.key.fields=2 \
    -D num.key.fields.for.partition=1 \
    -file wordMatrix_mapperPairs.py -mapper wordMatrix_mapperPairs.py \
    -file wordMatrix_reducerPairs.py -reducer wordMatrix_reducerPairs.py \
    -partitioner org.apache.hadoop.mapred.lib.KeyFieldBasedPartitioner \
    -input /user/juliana/input \
    -output /user/juliana/output2

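- num.key.fields.for.partition=1 specifies that only the first field (word1) is used by the partitioner, so all pairs that share the same first word are sent to the same reducer. The key itself still consists of both fields.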
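
To make the difference between the two settings concrete, here is a small local simulation in Python. It is only a sketch, not Hadoop's actual code: it assumes the mapper writes tab-separated lines of the form word1, word2, count, and it uses zlib.crc32 as a stand-in for Hadoop's internal hash function, so the reducer numbers it produces will not match a real cluster. The function names and sample data are made up for illustration.

```python
import zlib
from collections import defaultdict


def partition(line, num_key_fields_for_partition, num_reducers, sep="\t"):
    """Return the reducer index for one mapper output line.

    Only the first `num_key_fields_for_partition` fields are hashed,
    mimicking the effect of -D num.key.fields.for.partition=N.
    """
    fields = line.split(sep)
    partition_key = sep.join(fields[:num_key_fields_for_partition])
    # crc32 is a deterministic stand-in; Hadoop uses its own hash function.
    return zlib.crc32(partition_key.encode("utf-8")) % num_reducers


def assign(lines, num_key_fields_for_partition, num_reducers=2):
    """Group mapper output lines by the reducer they would be sent to."""
    buckets = defaultdict(list)
    for line in lines:
        index = partition(line, num_key_fields_for_partition, num_reducers)
        buckets[index].append(line)
    return dict(buckets)


# Hypothetical mapper output: word1 <TAB> word2 <TAB> count
mapper_output = [
    "apple\tbanana\t1",
    "apple\tcherry\t1",
    "banana\tapple\t1",
    "apple\tbanana\t1",
]

# Partition on (word1, word2): identical pairs always land together,
# but pairs that merely share word1 may be split across reducers.
by_pair = assign(mapper_output, num_key_fields_for_partition=2)

# Partition on word1 only: every pair starting with "apple" lands together.
by_first_word = assign(mapper_output, num_key_fields_for_partition=1)

if __name__ == "__main__":
    print("partition on 2 fields:", by_pair)
    print("partition on 1 field: ", by_first_word)
```

Partitioning on the first field alone is useful when a reducer needs to see every pair for a given word1, for example to build one row of the word matrix.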

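How do I specify which subset of a key is used by the partitioner on AWS?

- You can do this when you configure a step, by adding the appropriate directives and parameters (for example, the -D options and the -partitioner argument shown above) in the Arguments box (screenshot: emr-partitioner.png).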

How do I run a mapreduce job on a single-node installation?

- I have created an example and detailed instructions on how to run a mapreduce job on a single-node installation.

The instructions are in: http://vgc.poly.edu/~juliana/courses/BigData2014/Lectures/MapReduceExample/readme.txt

- You can download all the necessary files from:

http://vgc.poly.edu/~juliana/courses/BigData2014/Lectures/MapReduceExample/mr-example.tgz