Difference between revisions of "Provenance challenge"

| Line 21: | Line 21: | ||

[[Image:pc_model_er.gif]] | [[Image:pc_model_er.gif]] | ||

== | == API Primitives == | ||

The | |||

The API consists of a set of basic primitives for querying workflow definitions and executions. | |||

Revision as of 05:20, 3 May 2007

Second provenance challenge design overview

This page describes our approach to answer the queries of the Second Provenance Challenge

We have developed a new, general API for querying provenance models used by different scientific workflow systems.

Scientific Workflow Provenance Data Model (SWPDM): Overview

The SWDM aims to capture entities and relationships that are relevant to both the definition and execution of workflows. The goal is to define a general model that is able to represent provenance information obtained by different workflow systems.

Module definition is a description of a processor that takes inputs and generates outputs.

Workflow definition is a description of a simple workflow that contains modules and connections between them through ports. It also contains the evolution of the workflow through a parent relation as used by VisTrails.

Execution log is the information about a workflow execution. It contains information about the processors that were executed and the data items that were created.

API Primitives

The API consists of a set of basic primitives for querying workflow definitions and executions.

node types:

| name | description |

|---|---|

| dataitem | a dataitem that is input/output to a module execution |

| module | the module/service that is to be executed |

| moduleInstance | the module as represented in a workflow |

| moduleExecution | the execution of a module |

| workflow | a description of a process containing modules and connections |

| workflowExecution | the representation of a workflow execution |

| inputPort | represents a specific port thas can be assigned an input to a module execution |

| outputPort | represents a specific port thas can contain a product of a module execution |

| connection | represents a connection between module Instances |

Relations

The basic relations between the primitives is shown in the diagram above. However few of the current provenance systems contains all of this information. Some may lack information about produced data items or miss the definition of a workflow. The goal is to extract as much information as possible from each source.

In addition, for the information contained in each primitive, we suggest using an annotation relation for extracting the information. It should be possible to add custom annotations to all primitives. Widely used information like execution start and end-time might be implemented as separate functions.

Upstream

The most common use of provenance data will be to perform the transitive closure of some connected executions or data items i.e. to track data dependencies back and forward in time. We call this upstream for tracking back in time and downstream for tracking forward in time.

We have identified 4 primitives to which upstream/downstream tracking is relevant:

| primitive | description |

|---|---|

| dataitem | tracking data dependencies |

| moduleExecution | tracking execution dependencies |

| moduleInstances | tracking module dependencies within a workflow |

| workflow | tracking workflow design history e.g. different workflow versions in the VisTrails action tree |

For a general transitive function we propose:

- traverse(start, end, limit, stop)

The definition is: start is the start point and end is the endpoint. Limit is the max number of steps to search where 0 means no limit. Stop is an optional list of nodes which should not be explored further. 'Stop' is needed by some queries in the challenge and also adds to the expressiveness of the queries.

The function shold return all elements between start and end with a maximum length of 'limit' and excluding branches after 'stop'. If start or end is omitted e.g. '*', the function should continue to traverse until no more results are found or limit is reached.

Examples:

- traverse(start, *, 0) (downstream)

- traverse(*, end, 0) (upstream)

- traverse(start, end, 0) (find all elements in between)

- traverse(*, end, 0, softmean) (upstream excluding nodes after softmean)

There should also exist a way of checking if two nodes are related to each other

- related(start,end,limit) (Return true if a path exist between the nodes)

Implementation

The implementation is done in python. Currently the API has been partially implemented for XML using XPath and RDF using SPARQL.

There are currently three ways to implement the transitive closure:

- Natively in the Query processor (not implemented yet)

- As an extra graph structure as described below

- An implementation in python using basic relation queries on the source. (Very slow)

For small data stores any implementation is possible. For large data stores (2) might not be possible. If (1) is not possible in the query engine, your only choice is (3), which might be very slow. An assumption would be that an implementation of a provenance store would probably support (1). Or implement its own version of (2).

For query processors not capable of transitive closure (like the ones above), we have implemented a Graph structure. During initialization it loads the transitive relations in the data store. All upstream/downstram queries will then be directed to that Graph structure for fast computation.

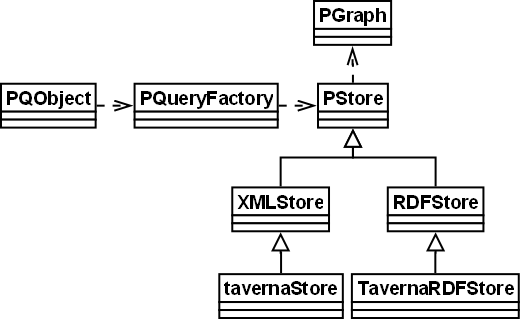

System overview

The structure of the system can be summarized in the figure below.

PQObject represents a node in the provenance data by storing its id and namespace (Its PId). It can call methods in PQueryFactory to traverse the data as a graph. PQueryFactory contains the sources and forwards queries to the correct source by comparing namespaces.

All data source inherits from PStore. It contains functions implementing data aliases, execution dependencies in other sources and sending queries to the right functions. It also provides access to PGraph that implements a structure for storing and querying transitive relations. This structure can be used if the query engine does not support efficient querying of transitive closure. It works by loading the transitive data during initialization and then direct all transitive queries to it. It is thus not suited for very large data.

There are currently 2 classes implementing specific data type access: XMLStore implements loading of an XML data file and provides access through XPath. RDFStore implements access to an RDF server using SPARQL. Other data types i.e. relational DB can be implemented in a similar way.

The figure shows TavernaStore and TavernaRDFStore. They are both implementing the API for the MyGrid team data files. The MyGrid Taverna data is in XML/RDF which makes it possible to process it using both XPath and SPARQL. Having two versions makes comparison between implementations easy without having to worry about different data formats.

Another partially implemented XML source is the Southampton team.

Possible edges in the data model

| \ | W | I | C | M | O | I | R | E | D | A |

|---|---|---|---|---|---|---|---|---|---|---|

| W | X | X | X | X | X | |||||

| I | X | X | X | X | X | |||||

| C | X | X | X | X | ||||||

| M | X | X | X | |||||||

| O | X | X | ||||||||

| I | X | X | ||||||||

| R | X | X | X | |||||||

| E | X | X | X | |||||||

| D | X | X | X | |||||||

| A |

Legend: W=Workflow,I=Instance,C=Connection,M=Module,O=OutputPort,I=InputPort,R=Run,E=Execution,D=DataItem,A=Annotation

The upper part is normal edges. The lower part is secondary edges.

Edges between same types of dataitem creates a need to specify the edge type, i.e. different types of edges. The execution/data edge has two different kinds of edges for two dataitems. e.g. Execution have as output a dataitem and Execution have as input a dataitem

--Tommy 03:40, 13 April 2007 (MDT)--